An Inconvenient Probability v5.12

Bayesian analysis of the probable origins of Covid. Quantifying "friggin' likely"

[This version is substantially changed from V4 by using more relevant priors better integrated with the basic observations for simplicity. The lack of independence between when/where/what features under the lab leak hypothesis is now made explicit from the start rather than patched in. Just as I was about to post, new data on planned sequence features became available, so this update coincidentally includes not only some change in form but also an appreciable change in the odds. 5.11 dropped a factor that was based on my misreading of a paper. 5.12 uses an improved method of allowing for sequences in related viruses. Some of the introductory remarks are anachronistic, but I’ve written about the current politics elsewhere.]

The method remains explicitly ready for correction based on improved reasoning or new evidence. [Last notable revision 5.11→5.12 11/10/2025]

Introduction

Early on in the Covid pandemic, I took a preliminary look at the relative probabilities that SARS-CoV-2 (SC2) came from some sort of lab leak vs. more traditional direct zoonotic paths. Although zoonotic origins have been more common historically, the start in Wuhan where the suspect lab work was concentrated left the two possibilities with comparable probabilities. That result seemed convenient because it left strong motivation both to increase surveillance against future zoonosis and to stringently regulate dangerous lab work. Since then there have been major changes in circumstances that change both the balance of the evidence and the balance of the consequences of looking at the evidence. I think these warrant taking another look.

The origins discourse has become increasingly polarized with different opinions often tied to a package of other views. To avoid having some readers turn away out of aversion to some views that have become associated with the suspicion of a lab leak, it may help to first clarify why I think the question is important and to name some of the claims that I’m not making before plunging into the more specific analysis of probabilities.

I’m now more concerned about dangerous work being accelerated rather than useful work being over-regulated. This is not a specifically Chinese problem. Work with dangerous new viruses has been done, is underway, or is planned in Madison, the Netherlands, Australia, and South Korea, with some questionable work in Boston. I’m not suggesting that the US or other western governments should officially say that they think SC2 started from a Wuhan lab. That would make it harder to work with China on the crucial issues of global warming and even on international pathogen safety regulation itself. (I just signed a letter “urging renewal of US-China Protocol on Scientific and Technological Cooperation”.) I’m definitely not endorsing the cruel opposition to strenuous public health measures that seems to have become associated with skepticism about the zoonotic account.

In what follows I will try to objectively calculate the odds that SC2 came from a lab, in the hope that will be useful to anyone thinking about future research policy. The underlying motivation for this effort has been eloquently described by David Relman, who has also provided a nice non-quantitative outline of the general types of evidence supporting different SC2 origins hypotheses.

Looking forward, what we really care about is estimating risks. We already know from experience that the risks of zoonotic pandemics are significant. Are the risks of some types of lab work comparably significant? We shall use some prior estimates of those risks on the way to answering a more concrete question: does it look like SC2 came from a lab? If the answer is “probably not”, then it’s at least possible that the prior estimates of significant lab risk may have been overstated, although the evidence for that would be skimpy. If the answer is “probably yes”, that indicates that the prior estimates of significant risk should not have been ignored.

The method used may also help readers to evaluate other important issues without relying too much on group loyalties. The method is explicitly ready for correction based on improved reasoning or new evidence. Our method will be robust Bayesian analysis, a systematic way of updating beliefs without putting too much weight on either one’s prior beliefs or the new evidence. Bayesian analysis does not insist that any single piece of evidence be “dispositive” or fit into any rigid qualitative verbal category. Some hypotheses can start with a big subjective head-start, but none are granted categorical qualitative superiority as “null hypotheses” and none have to carry a qualitatively distinct “burden of proof”. Each piece of evidence gets some quantitative weight based on its consistency with competing hypotheses. The consistency with evidence then allows us to substantially change our prior guesses so that the final probability estimate is not just a recycling of our initial opinions.

In practice there are subjective judgments not only about the prior probabilities of different hypotheses but also about the proper weights to place on different pieces of evidence. I will use hierarchical Bayes techniques to take into account the uncertainty in the impacts of different pieces of evidence and “Robust Bayesian analysis” to allow for the uncertainty in the priors.

There have been about a dozen more-or-less-published Bayesian analyses of SC2 origins, other than my own very preliminary inconclusive one. The ones that attempt to be comprehensive and were published before the first version of this blog all came to conclusions similar to the one I shall reach here. Starting in early 2024 several have come out favoring a zoonotic spillover at a market. All are discussed in Appendix 1.

I will focus on comparing the probability that SC2 originated in wildlife vs. the probability that it originated in work similar to that described in the 2018 DEFUSE grant proposal submitted to the US Defense Advanced Research Projects Agency (DARPA) by institutions that included the University of North Carolina (UNC), the Duke National University of Singapore, and the EcoHealth Alliance (EHA) as well as the Wuhan Institute of Virology (WIV). (For brevity I’ll just refer to this proposal as DEFUSE.) Although DEFUSE was not funded by DARPA, anyone who has run a grant-supported research lab knows that work on yet-to-be-funded projects routinely continues except when it requires major new expenses such as purchasing large equipment items. Some closely related work was described shortly afterward in an NIH grant application from EHA and WIV and in a grant to WIV from the Chinese Academy of Sciences. When Der Spiegel asked Shi Zhengli, the lead coronavirus researcher at WIV, whether the work had started anyway, she responded “I don’t want to answer this question…”

I will not discuss any claims about bioweapons research. It is not exactly likely that a secret military project would request funding from DARPA for work shared between UNC and WIV.

My analysis will not make use of the rumors of roadblocks around WIV, cell-phone use gaps, sick WIV researchers, disappearances of researchers, etc. That sort of evidence might someday be important but at this point I can’t sort it out from the haze of politically motivated reports. Mumbled inconclusive evidence-free executive summaries from various agencies are even less useful. I will discuss in passing two recent U.S. government funding decisions that could potentially provide weak evidence concerning the actual probabilities as seen by those with inside information. The biological and geographic data are much more suited to reliable analysis.

The main technical portions will be unpleasantly long-winded since for a highly contentious question it’s necessary to supply supporting arguments. Although parts may look abstract to non-mathematical readers, all the arguments will be accessible and transparent, in contrast to the opaque complex modeling used in some well-known papers. For the key scientific points I will provide standard references. At some points I bolster arguments with vivid quotes from key advocates of the zoonotic hypothesis, providing convenient links to secondary sources. The quotes may also be obtained from searchable PDFs of Slack and email correspondence.

The outline is to

1. Give a short non-technical preview.

2. Introduce the robust Bayesian method of estimating probabilities, along with some notation.

3. Discuss a reasonable rough consensus starting point for the estimation, i.e. the prior odds for a pandemic of this sort starting in Wuhan in 2019 via routine processes unrelated to research or via research-related activities.

4. Discuss whether the main papers that have claimed to demonstrate a zoonotic origin via the wildlife trade should lead us to update our odds estimate.

5. Update the odds estimate using a variety of other evidence, especially sequence features.

6. Present brief thoughts about implications for future actions.

Preview

I will denote three general competing hypotheses:

ZW: zoonotic source transmitted via wildlife to people, with a wet market as the suspected route.

ZL: zoonotic source transmitted to people via lab activities sampling, transporting or otherwise handling viruses.

LL: a laboratory-modified source, leaked in some lab mishap.

At points I’ll divide the ZW hypothesis into two branches: ZWM, involving a spillover from an intermediate host at the Huanan Seafood Market (HSM), and ZWO, covering all other ZW paths. This division is useful because a substantial amount of the evidence cited (tending both for and against) is pertinent only to the ZWM sub-hypothesis.

The viral signatures of ZW and ZL would be similar, so the ratio of their probabilities would be estimated from knowledge of intermediate wildlife hosts, of the lab practices in handling viral samples, and detailed locations of initial cases. Demaneuf and De Maistre wrote up a careful Bayesian discussion of that issue in 2020, before the DEFUSE proposal for modifying coronaviruses was publicly known. They concluded that the probability of ZW, i.e. P(ZW), and the probability of ZL, i.e. P(ZL), were about equal.

Much of their analysis, particularly of prior probabilities, is close to the arguments I use here, but written more gracefully and with more thorough documentation. They use a different way of accounting for uncertainties than I do, but unlike some other estimates their method is transparent and rational. Here I’ll focus on comparing the probability P(ZW) to that of the LL lab account, P(LL), because sequence data point to a lab involvement in generating the viral sequence, so that P(ZL) will itself be somewhat smaller than P(LL). (I’ve added Appendix 5 to discuss the ZL probability.)

Ratios of probabilities such as P(LL)/P(ZW) are called odds. It’s easier to think in terms of odds for most of the argument because the rule for updating odds to take into account new evidence is a bit simpler than the rule for updating probabilities.

I’ll start with odds that heavily favor ZW since historically most new epidemics do not come from research activities. Then I’ll update using several important facts. The most basic what/where/when facts are that SC2 is a sarbecovirus that started a pandemic in Wuhan in 2019. Wuhan is the location of a major research lab that had not long before the outbreak submitted the DEFUSE grant proposal that included plans to collect bat sarbecoviruses and modify them in ways later found in SC2. That location, timing, and category of virus could also have occurred by accidental coincidences for ZW, but we shall see that it’s not hard to approximately convert the coincidences to factors objectively increasing the odds of LL. Here’s a beginning non-technical explanation of how the odds get updated.

I’ll start with a consensus view, that the prior guess would be that overall P(LL) is much less than P(ZW). That corresponds to the standard idea that you would call ZW the null hypothesis, i.e. the boring first guess. Rather than treat the null as qualitatively sacred I’ll just leave it as initially quantitatively more probable by a crudely estimated factor.

Now we get to the simple part that has often been either dismissed or over-emphasized. Both P(ZW) and P(LL) come from sums of tiny probabilities for each individual person. P(LL) comes mostly from a sum over individuals in Wuhan. P(ZW) comes from a sum over a much larger set of individuals spread over China and southeast Asia. Since we know with confidence that this pandemic started in Wuhan, restricting the sum of individual probabilities to people around Wuhan doesn’t reduce the chances for LL much but eliminates most of the contributions to the chances for ZW. Wuhan has less than 1% of China’s population, so ~99% of the paths to ZW are crossed off. That means we need to increase whatever P(LL)/P(ZW) odds we started with by about a factor of 100.
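The arithmetic behind that factor can be sketched in a few lines. This is only a rough illustration: the function name is made up here, the 90% share of LL paths located in Wuhan is a hypothetical placeholder for "doesn't reduce the chances for LL much", and spreading ZW paths in proportion to population is the same simplification used in the paragraph above.

```python
def location_likelihood_ratio(frac_ll_paths_in_city, frac_zw_paths_in_city):
    """Ratio P(starts in city | LL) / P(starts in city | ZW)."""
    return frac_ll_paths_in_city / frac_zw_paths_in_city

# "Less than 1%" of China's population lives in Wuhan; 1% is used as a round
# upper value, with ZW paths assumed to be spread roughly by population.
frac_zw_paths_in_wuhan = 0.01

# Hypothetical placeholder: most, but not all, LL paths run through Wuhan.
frac_ll_paths_in_wuhan = 0.9

ratio = location_likelihood_ratio(frac_ll_paths_in_wuhan, frac_zw_paths_in_wuhan)
print(f"odds update factor from location: about {ratio:.0f}")
```

In the Fermi-style spirit of the argument, a result of roughly 90 and "about a factor of 100" are the same answer.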

Further updates following the same logic come from other data. A natural outbreak could come from any of a diverse collection of pathogens, but this outbreak matched the specific subcategory of virus being studied in the Wuhan labs. Another update will come from a special genetic sequence that codes for the furin cleavage site (FCS) where the UNC-WIV-EHA DEFUSE proposal suggested adding a tiny piece of protein sequence to a natural coronavirus sequence. The tiny extra part of SC2’s spike protein, the FCS that is absent in its wild relatives, has nucleotide coding that is rare for related natural viruses but seems less peculiar for the most relevant known designed sequences, the mRNA vaccines. A similar update involves a pattern of how the virus sequence can be cut into pieces by some lab restriction enzymes, a pattern closely matching plans in DEFUSE drafts but quite rare in natural viruses. We can again make approximate numerical estimates of how much more coincidental such features seem for a natural origin than for a lab origin.

Even if we start with a generously high but plausible preference for ZW, once the evidence-based updates are done we’ll have P(LL) much larger than P(ZW). P(ZW) will shrink to less than 1%, and is saved from shrinking much further only by allowance for uncertainties.

An analogy may help clarify the method for those who have never used it before. Say that you’ve been hanging out in your house for a few hours. At some point you turn on a light in the kitchen. A few minutes after that you smell burning electrical insulation in the kitchen. Even though fires are not usually caused by faulty electrical wiring in kitchens, you would rightly suspect that this one was. Bayesian reasoning allows you to systematically express and evaluate the intuitions behind that suspicion.

This openly crude and approximate form of argument may alarm readers who are not accustomed to the Fermi-style calculations routinely used by physicists. In this sort of calculation one doesn’t worry much about minor distinctions between similar factors, e.g. 8 and 12, because the arguments are not generally that precise. Sometimes the large uncertainties in such a calculation render the conclusion useless, but this turns out not to be one of those cases.

Methods

The standard logical procedure to calculate the odds, P(LL)/P(ZW), is to combine some rough prior sense of the odds with judgments of how consistent new pieces of evidence are with the LL and ZW hypotheses. Bayes’ Theorem provides the rule for how to do this. (See e.g. this introduction.)

One starts with some roughly estimated odds based on prior knowledge:

P0(LL)/P0(ZW). Then one updates the odds based on new observations. The conditional probabilities that you would see those observations if a hypothesis (either LL or ZW) were true are denoted P(observations|LL) and P(observations|ZW), called the “likelihoods” of LL and ZW. Each conditional probability is evaluated without regard to whether the hypothesis itself is probable or not.

Rather than categorizing each unusual feature as either a smoking gun or mere coincidence, Bayesian analysis assigns each feature a quantitative odds update factor. Events that are unusual under some hypothesis do not rule out that hypothesis but they do constitute evidence against it if the events are more likely under a competing hypothesis. Our task here is to try to turn each qualitative surprise into a rough quantitative likelihood ratio.

Assuming these likelihoods are themselves known, Bayes’ Theorem tells us the new “posterior” odds are

P(LL)/P(ZW) = (P0(LL)/P0(ZW))*(P(observations|LL)/P(observations|ZW)).

In practice, it’s hard to reason about all the observations lumped together, so we break them up into more or less independent pieces, to the extent that can be done, and do the odds update using the product of the likelihood ratios for those pieces.

P(LL)/P(ZW) =

(P0(LL)/P0(ZW))*(P(obs1|LL)/P(obs1|ZW))*(P(obs2|LL)/P(obs2|ZW))…… *(P(obsn|LL)/P(obsn|ZW))

At several key points we’ll see that several aspects of the observations would not be close to independent under one or the other of the hypotheses, so we’ll be careful in those cases not to break up the likelihoods into separate factors.

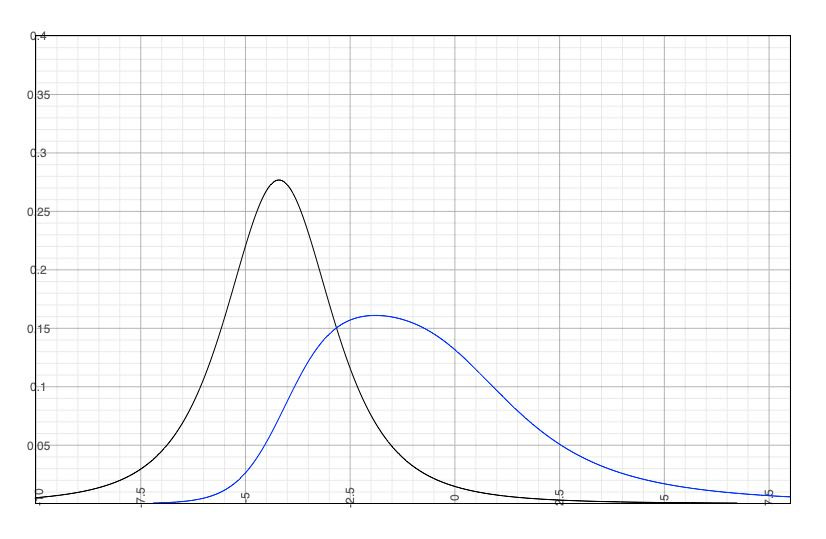

At this point it’s necessary to recognize that not only the prior odds P0(LL)/P0(ZW) but also the likelihoods involve some subjective estimates. In order to obtain a convincing answer we need to include some range of plausible values for each likelihood ratio. As we shall see, inclusion of the uncertainties is important because realistic recognition of the uncertainties will tend to pull the final odds back from an extreme value towards one.

Once our odds become products of factors of which more than one have some range of possible values, our expected value for the product is no longer equal to the product of the expected values. Since the expected value of a sum is just the sum of the expected values it’s convenient to convert the product to a sum by taking the logarithms of all the factors.

ln(P(LL)/P(ZW)) = ln(P0(LL)/P0(ZW))+ln(P(obs1|LL)/P(obs1|ZW)) … +ln(P(obsn|LL)/P(obsn|ZW)) = logit0 + logit1 … +logitn

where “logit” is used for brevity. The logarithmic form has the added advantage that the typical error bars around the best estimate are often about symmetrical.
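For readers who prefer code to formulas, here is a minimal sketch of the update rule in both its product form and its log form. The prior odds and likelihood ratios are hypothetical numbers chosen only to show the mechanics, not the estimates developed below, and the function names are my own. The final conversion from odds to probability assumes LL and ZW are the only two options, ignoring ZL.

```python
import math

def posterior_odds(prior_odds, likelihood_ratios):
    """Product form: prior odds times each likelihood ratio in turn."""
    odds = prior_odds
    for ratio in likelihood_ratios:
        odds *= ratio
    return odds

def posterior_log_odds(prior_odds, likelihood_ratios):
    """Log form: logit0 + logit1 + ... + logitn."""
    return math.log(prior_odds) + sum(math.log(r) for r in likelihood_ratios)

# Hypothetical inputs, for illustration only.
prior_odds = 1 / 100               # P0(LL)/P0(ZW), strongly favoring ZW
likelihood_ratios = [100, 5, 0.5]  # each is P(obs_i|LL)/P(obs_i|ZW)

odds = posterior_odds(prior_odds, likelihood_ratios)
log_odds = posterior_log_odds(prior_odds, likelihood_ratios)

# The two forms agree up to floating-point rounding.
print(odds, math.exp(log_odds))

# Odds to probability, treating LL and ZW as the only alternatives.
probability_ll = odds / (1.0 + odds)
print(probability_ll)
```

Note that a likelihood ratio below one (here 0.5) simply contributes a negative logit, so evidence favoring ZW enters the sum on the same footing as evidence favoring LL.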

At each stage I will include a crude estimate of the log of each likelihood ratio and of its uncertainty, expressed as a standard error of that log. Standard hierarchical Bayes techniques then down-weight factors with big uncertainty. The resulting down-weighted logits for factors with big uncertainty (si) tend to be smaller than the initial crude estimates of the logs of the likelihood ratios (Li). The technique used is described in Appendix 3. The results are not sensitive to the details because I do not use likelihood ratios with large si. The down-weighted results are the logits used.

Once the net likelihood factor is estimated, taking uncertainties into account, we still have a distribution of plausible prior odds. This can also be treated by assuming a probability distribution around the point estimate of the log odds. The final odds will be obtained from integrating the net probabilities, including the net likelihood factor, over that distribution. This distribution is wide enough to make its form, not just its standard deviation, potentially important for the result.

The treatments of the priors and the likelihoods look superficially similar, but are not equivalent. Uncertainty in the likelihoods leads to discounting the likelihood ratios but not to discounting the priors. Uncertainty in the priors leads to discounting both. Thus observations with uncertain implications leave the priors untouched but highly uncertain priors can make fairly large likelihood ratios irrelevant. Since in this case the priors tend toward ZW but the likelihoods tend more strongly toward LL the inclusion of each type of uncertainty will substantially reduce the net odds favoring LL.
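A rough numerical sketch shows how uncertainty in the prior pulls the net odds back toward one. It is one reading of the integration step, not the exact procedure used later: the normal shape of the prior distribution, all three input numbers, and the function names are assumptions made only for illustration, and the separate down-weighting of uncertain likelihood ratios is not included.

```python
import math

def sigmoid(log_odds):
    """Convert log odds ln(P/(1-P)) into the probability P."""
    return 1.0 / (1.0 + math.exp(-log_odds))

def normal_pdf(x, mean, sd):
    z = (x - mean) / sd
    return math.exp(-0.5 * z * z) / (sd * math.sqrt(2.0 * math.pi))

def averaged_probability(prior_mean, prior_sd, net_logit, n=4001, width=8.0):
    """Trapezoid-rule average of P(LL) over a normal spread of prior log odds."""
    lo = prior_mean - width * prior_sd
    step = 2.0 * width * prior_sd / (n - 1)
    total = 0.0
    for i in range(n):
        x = lo + i * step
        weight = step / 2.0 if i in (0, n - 1) else step
        total += weight * normal_pdf(x, prior_mean, prior_sd) * sigmoid(x + net_logit)
    return total

# Hypothetical inputs, not the estimates developed in this post.
prior_mean = math.log(1 / 100)  # point estimate of prior log odds, favoring ZW
prior_sd = math.log(10)         # assumed spread: about a factor of 10 either way
net_logit = math.log(10_000)    # assumed net log likelihood ratio, favoring LL

p_point = sigmoid(prior_mean + net_logit)
p_avg = averaged_probability(prior_mean, prior_sd, net_logit)
odds_point = p_point / (1.0 - p_point)
odds_avg = p_avg / (1.0 - p_avg)
print(f"odds ignoring prior uncertainty: {odds_point:.1f}")
print(f"odds after averaging over the prior: {odds_avg:.1f}")
```

With these made-up inputs the averaged odds still favor LL but by a much smaller margin than the point estimate, because the low-odds tail of the prior distribution contributes more to P(ZW) than the high-odds tail removes.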

Often our hypotheses can be broken up into sub-hypotheses. For example, ZW can occur via market animals or directly from a bat, among other possibilities. LL can occur at WIV or at the Chinese CDC. There’s nothing wrong or contradictory in summing probabilities over sub-hypotheses. In calculating these contributions, however, it is crucial to separately multiply the chain of observational factors for each contributing sub-hypothesis and then add the resulting probabilities, rather than adding the probabilities at each observational step and then multiplying. For example, suppose the hypothesis is that some cookies were stolen by a team of animals consisting of a snake and a pig. Knowing that the team can get through a small opening and can knock down a wall does not give any probability that they stole a cookie that could only be reached by getting through a small hole and then knocking down a wall. Neither sub-hypothesis (snake or pig) works, although a coarse-grained look would say that the snake/pig team has good chances of both hole-threading and wall-smashing. This issue will come up several times in important if less extreme contexts.
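The snake/pig example can be written out as a small sketch contrasting the two orders of operation. The 50/50 split between the two animals and the all-or-nothing step probabilities are hypothetical choices that make the example extreme, and the function names are my own.

```python
from math import prod

# Prior weight of each sub-hypothesis within the "snake/pig team" hypothesis
# (hypothetical 50/50 split).
sub_priors = {"snake": 0.5, "pig": 0.5}

# P(step succeeds | sub-hypothesis) for the two steps needed to reach the cookie.
step_likelihoods = {
    "snake": {"thread_hole": 1.0, "smash_wall": 0.0},
    "pig": {"thread_hole": 0.0, "smash_wall": 1.0},
}
steps = ["thread_hole", "smash_wall"]

def multiply_then_add(sub_priors, step_likelihoods, steps):
    """Correct: multiply the chain within each sub-hypothesis, then add."""
    return sum(
        weight * prod(step_likelihoods[name][step] for step in steps)
        for name, weight in sub_priors.items()
    )

def add_then_multiply(sub_priors, step_likelihoods, steps):
    """Mistaken: add over sub-hypotheses at each step, then multiply."""
    return prod(
        sum(weight * step_likelihoods[name][step] for name, weight in sub_priors.items())
        for step in steps
    )

print(multiply_then_add(sub_priors, step_likelihoods, steps))  # 0.0
print(add_then_multiply(sub_priors, step_likelihoods, steps))  # 0.25
```

The mistaken order credits the team with a 25% chance of an outcome that neither animal can produce; in less extreme cases the same error inflates the likelihood by a smaller but still misleading amount.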

Along the way we shall see several observed features that perhaps should give important likelihood factors that I think tend to favor LL but for which there’s substantial uncertainty and thus little net effect. I will just drop these to avoid cluttering the argument with unimportant factors. I will include some small factors when the sign of their logit is unambiguous, e.g. a factor from the lack of any detection of a wildlife host. I will not omit any factors that I think would favor ZW. I’ll take care not to penalize ZW’s odds for features that might seem peculiar under the ZW hypothesis but that would seem needed for a zoonotic virus to be able to cause a notable pandemic.

My analysis differs from some others in one important respect. The others treat “DEFUSE” as an observation and try to estimate something like P(DEFUSE|LL)/P(DEFUSE|ZW). I don’t see how to do that. Instead I treat DEFUSE (along with the closely related follow-up grants) as a way to define a particular branch of the LL hypothesis. Since it’s narrower than generic LL, it should be easier to find observations that don’t fit it, i.e. have low likelihoods. The flip side of that is that observed features that it does fit give higher likelihoods than they would for generic LL. Picking a reasonable prior for a leak of something stemming from DEFUSE-style work given the existence of the proposal will require looking more at prior estimates of lab leak probabilities rather than at the sparse history of previous pandemics. In earlier versions I used those estimates as a sanity check on more broadly inferred priors but here I switch those roles.

We will use priors based on attempts to predict yearly rates of spillovers from ongoing lab work based on prior knowledge of lab events. To the extent that those estimates are already reliable our whole exercise here is only of historical interest, since those estimates tell us directly to take lab risks seriously regardless of the source of this particular pandemic. Our priors, however, are far from precise, so looking at the evidence for this one pandemic will help us refine them. For practical purposes, we are only interested in estimating the source of this one pandemic in order to check the credibility of the warnings.

The quantitative arguments

Prior odds

Let’s start with the fuzzy generic prior odds just to get a rough feel of what’s plausible. In my lifetime, starting in 1949, there have been seven other significant (>10k dead) worldwide pandemics. At least one pandemic (1977 A/H1N1) came from some accident in dealing with viral material. (Nick Patterson tells me that a cattle disease, bluetongue, had a similar outbreak from some human-preserved samples.) So pandemics originating in research activity are not vanishingly rare. It’s more likely that the 1977 flu pandemic stemmed from a large-scale vaccine trial than a small-scale lab accident, so that broad background does little to pin down the prior probability of smaller-scale research triggering a pandemic. For that we need to turn to more specific studies of lab accidents.

Before looking numerically at the LL probabilities, let’s look at the competing ZW background. I shall bypass the contentious question of how often people are infected by zoonotic viruses and what fraction of those exposures lead to disease that transmits easily in people since only the product of those two highly uncertain numbers matters, and we have sufficiently reliable data on the product. A tabulation from 2014 of important pathogens emerging in China since the 1950’s lists 19 different ones, including one sarbecovirus—SC1, the original SARS. The somewhat arbitrary choice of which pathogens to include in the list will not be relevant after the next step, in which we specify that SC2 is a sarbecovirus. The choice just moves a factor between priors and likelihoods without changing the net odds result.

From that rate one can roughly estimate that the probability of a significant new pathogen in any year, e.g. 2019, would be

P0(2019, ZW) = ~ 1/3.

That’s higher than the probability of some widespread disease emerging from a research incident in any year, justifying the general view that if one must choose a favored “null hypothesis” for a generic new pathogen the choice would be ZW.

Now let’s turn to lab leaks. The number of labs doing risky research has grown dramatically in recent decades. For example, Demaneuf and De Maistre show a growth of a factor of ten in the number of BSL-3 labs in China between 2000 and 2020. The book Pandora’s Gamble amply documents that pathogen lab leaks are common, including in the US. A more recent summary describes over 300 lab-acquired infections and 16 lab pathogen escapes over a two-decade period. These are almost always caught before the diseases spread. Nevertheless, in 2006, the World Health Organization warned that the most likely source of new outbreaks of SC1 would be a lab leak, confirming that the danger of lab leaks was large according to consensus expert opinion. In 2012, Klotz and Sylvester warned of lab leak pandemic dangers in a Bulletin of the Atomic Scientists article. Now an experimental test of contamination errors in laboratories from three countries has been published, finding “The rate of spills found in our experiments overlaps and exceeds, by nearly an order of magnitude, the error rate used to drive conclusions in the Site Specific Risk Assessment of the National Bio- and Agro-defense Facility, and the Gain of Function Risk Benefit Assessment.”

There’s an important caveat, however. So far as we know, all of the past epidemics that came from labs (e.g. 1967 Marburg viral disease in Europe, 1979 anthrax in Sverdlovsk, 1977 influenza A/H1N1) were caused by natural pathogens. That’s not surprising, since until recently nobody was doing much pathogen modification in labs. The main modern method was only patented in 2006 by Ralph Baric, who was to have done the chimeric work on bat coronaviruses under the DEFUSE proposal. Without lab modification, only ZW and ZL would be viable hypotheses.

We know, however, that lots of modifications are underway now in many labs. In 2012 Anthony Fauci conceded the possibility that such research might cause an “unlikely but conceivable turn of events …which leads to an outbreak and ultimately triggers a pandemic”. The dangers were perceived as substantial enough for the Obama administration to at least nominally ban funding research involving dangerous gain-of-function modifications of pathogens.

When that ban was lifted under Trump in 2017, Marc Lipsitch and Carl Bergstrom raised alarms. Lipsitch wrote: “[I] worry that human error could lead to the accidental release of a virus that has been enhanced in the lab so that it is more deadly or more contagious than it already is. There have already been accidents involving pathogens. For example, in 2014, dozens of workers at a U.S. Centers for Disease Control and Prevention lab were accidentally exposed to anthrax that was improperly handled.” Bergstrom tweeted a similar warning. Ironically, Peter Daszak, head of the EcoHealth Alliance, who became extremely dismissive of the lab leak possibility after Covid hit, gave a talk in 2017 warning of the “accidental &/or intentional release of laboratory-enhanced variants”.

Similar warnings came from China. In 2018 a group of Wuhan scientists, mostly from WIV, wrote “The biosafety laboratory is a double-edged sword; it can be used for the benefit of humanity but can also lead to a ‘disaster.’ ” An extensive 2015 NIH-sponsored “Risk and Benefit Analysis of Gain of Function Research” concluded that lab modified coronaviruses could risk “increasing global consequences” by “several orders of magnitude.”

Perhaps the most authoritative work came from the Global Preparedness Monitoring Board (GPMB), which issued a prescient report from the Johns Hopkins Center for Health Security. Although that report’s many authors include at least one who has emphatically ridiculed any thought that SC2 could have come from accidental release, on Sept. 10, 2019, just before the pandemic started or was known to start, the GPMB report warned

Were a high-impact respiratory pathogen to emerge, either naturally or as the result of accidental or deliberate release, it would likely have significant public health, economic, social, and political consequences. Novel high-impact respiratory pathogens have a combination of qualities that contribute to their potential to initiate a pandemic. The combined possibilities of short incubation periods and asymptomatic spread can result in very small windows for interrupting transmission, making such an outbreak difficult to contain…

Biosafety needs to become a national-level political priority, particularly for countries that are funding research with the potential to result in accidents with pathogens that could initiate high-impact respiratory pandemics.

It is hard to see how such warnings would make sense if expert opinion held that the recent probability of a dangerous lab leak of a novel virus was negligible. At least since about 2012 the prior probability P0(LL) of escape of a modified pathogen has not been negligible.

Several relevant publications have described successful creations of dangerous lab-modified viruses. A Baric patent application filed in 2015 describes:

“Generation and Mouse Adaptation of a lethal Zoonotic Challenge Virus…. chimeric HKU3 virus (HKU3-SRBD-MA) containing the Receptor binding domain (green color) from SARS-CoV S protein. …. The asterisk indicates Y436H mutation which enhances replication in mice. HKU3-SRBD-MA was serially passaged in 20 week old BALB/c mice … to create a lethal challenge virus.”

Even more directly relevant, one paper including authors from WIV and UNC demonstrated potential for modified bat coronaviruses to become dangerous to humans:

“Using the SARS-CoV reverse genetics system, we generated and characterized a chimeric virus expressing the spike of bat coronavirus SHC014 in a mouse-adapted SARS-CoV backbone…. We synthetically re-derived an infectious full-length SHC014 recombinant virus and demonstrate robust viral replication both in vitro and in vivo.”

This paper prompted a 2015 response in Nature in which S. Wain-Hobson warned “If the virus escaped, nobody could predict the trajectory” and R. Ebright agreed “The only impact of this work is the creation, in a lab, of a new, non-natural risk.” Even in the research paper itself the authors called attention to the perceived dangers: “Scientific review panels may deem similar studies building chimeric viruses based on circulating strains too risky to pursue.” The 2018 DEFUSE and NIH proposals from WIV included plans for just such modifications of coronaviruses.

After SC2 started to spread, even K. G. Andersen, the lead author of the first key paper (“Proximal Origins”) claiming to show that LL was implausible, initially thought “…that the lab escape version of this is so friggin’ likely to have happened because they were already doing this type of work and the molecular data is fully consistent with that scenario.” That view is inconsistent with claims that the prior P0(LL) was extremely small, although it neither quantifies “friggin’ likely” nor establishes how much of “friggin’ likely” would be attributed to priors and how much to molecular data whose analysis may have since changed. Our task here will be to quantify “friggin’ likely”.

Let’s now look at some prior numerical estimates for lab leak probabilities based on records of other lab leaks. Here I will confine the LL hypothesis to one subset, leaks from research along the lines outlined in the DEFUSE proposal. In principle this omits a bit of the LL probability, but not enough to be important. From now on I’ll just use “LL” as shorthand for “DEFUSE-related LL”.

One serious pre-Covid paper estimated the chance of a human-transmissible leak at 0.3%/year for each lab. Another careful pre-Covid analysis of experiences of labs using very good but not extreme biosafety practices, BSL-3, estimated that the yearly chance of a major human-transmissible leak was around 0.2% per lab to 1% per full-time lab worker. For a large lab doing much of its work at a much lower safety level (BSL-2) the chances would be higher. For a lab doing work on an extraordinarily transmissible virus the probability would be even higher. According to Shi Zhengli, “coronavirus research in our laboratory is conducted in BSL-2 or BSL-3 laboratories.”

An early exchange among the DEFUSE team members in a draft of the DEFUSE proposal claimed that “The BSL-2 nature of work on SARSr-CoVs makes our system highly cost-effective relative to other bat-virus systems.” The researchers specifically discussed plans to conduct work nominally described as intended to be done under enhanced BSL-3 at UNC instead in Wuhan “to stress the US side of this proposal so that DARPA are comfortable”, as Daszak put it. Baric pointed out “In China, might be growin these virus under bsl2. US researchers will likely freak out.” [sic] Baric has now testified before Congress that he had written Daszak “Bsl2 with negative pressure, give me a break….Yes china has the right to set their own policy. You believe this was appropriate containment if you want but don’t expect me to believe it. Moreover, don’t insult my intelligence by trying to feed me this load of BS.”

There were even U.S. State Department cables warning specifically that bat coronavirus work in Wuhan faced safety challenges, indicating that the Wuhan estimate should be raised compared to those for generic labs. WIV had previously demonstrated the ability to generate new strains that gave viral titers in human airway cells enhanced by over a factor of 1000 compared to the starting natural strains, ultimately leading Health and Human Services to ban WIV from receiving funding.

We can make a crude estimate that if DEFUSE-like work was started at WIV then

P0if(2019, LL) = ~ 1/100. I think that would be a major underestimate of the probability that an easily transmissible virus would leak from a BSL-2 lab working under conditions about which “US researchers will likely freak out.” I’ve arrived at that probability by implicitly considering another factor. Although WIV had previously succeeded in making a novel coronavirus with “potential for human emergence” (as had labs working with novel flu viruses) we do not know for sure that the DEFUSE plan would have succeeded in its attempt to make a human-transmissible virus. The possibility of failure needs to be factored in. It’s also hard to estimate how the probability of a human-transmissible lab virus being able to cause a pandemic compares with that of human-transmissible natural viruses. My lack of expertise on these factors contributes to the large uncertainty in the priors. I would welcome estimates from disinterested virologists of the probability that a DEFUSE-like plan would not have succeeded well enough to make a problematic virus.

We do not know for sure that such work was started, but we do know that shortly after DEFUSE was turned down WIV received major funding from the Chinese Academy of Sciences for a similar but more vaguely worded proposal and that Shi Zhengli declined to answer Der Spiegel’s question about whether the work had started. Again in the spirit of crude estimates, let’s conservatively say that there’s about a 50% chance the work proceeded. (This is an underestimate, given that Baric testified before Congress concerning “evidence that they [WIV] were building chimeras”.) We then have our starting point:

P0(2019, LL) = ~ 1/200.

This gives starting odds

P0(2019, LL)/P0(2019, ZW) = ~1/70.

Taking the log gives

L0 = ln(P0(2019, LL)/P0(2019, ZW)) = ~-4.2.

This estimate is obviously very rough, especially because of uncertainties about the lab. Let’s say that we could fairly easily be off by a factor of 10. Although each subsequent likelihood ratio adjustment has its own uncertainty, the uncertainty of these prior odds will be the most important one. Our prior is then equivalent to

L0 = -4.2 ± 2.3.

where the ±2.3, equivalent to the factor of 10, is meant to roughly show the standard error in estimating the logit. A standard error of 2.3 allows and even requires that errors outside the ±2.3 range are possible, although not very probable. “4.2” is not meant to convey false precision, just to translate our rough estimates into convenient units.
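The arithmetic behind this starting point can be checked in a few lines. The sketch below uses only the rough figures stated above. The variable names are mine, and the implied yearly ZW probability is simply backed out from the stated 1/200 and 1/70 rather than taken from any separate source.

```python
import math

# Rough inputs stated in the text (crude estimates, not measurements).
p_leak_if_started = 1 / 100   # P0if(2019, LL)
p_work_started = 0.5          # chance that DEFUSE-like work proceeded at WIV
prior_odds = 1 / 70           # stated P0(2019, LL) / P0(2019, ZW)

p0_ll = p_leak_if_started * p_work_started   # P0(2019, LL) = 1/200
implied_p0_zw = p0_ll / prior_odds           # backed out, not an independent input

L0 = math.log(prior_odds)     # prior log odds
sigma0 = math.log(10)         # "off by a factor of 10" expressed in ln units

print(f"P0(2019, LL) = 1/{1 / p0_ll:.0f}")
print(f"implied P0(2019, ZW) = {implied_p0_zw:.2f}")
print(f"L0 = {L0:.1f} ± {sigma0:.1f}")
```

Working in natural-log units means each later piece of evidence simply adds its own log likelihood ratio to L0.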

Where and What: Sarbecovirus starting in Wuhan

Now let’s take the first obvious pieces of evidence—the pandemic was caused by a sarbecovirus and started in Wuhan. By limiting our LL account to the DEFUSE-like subset, we’ve made one calculation trivial: P(Wuhan, sarbecovirus|LL) = ~1 since our restricted version of LL already specifies those with near certainty. In other words, LL has already paid the probability price of being restricted to a narrow version and thus avoids any likelihood cost of outcomes implied by that limited version. (One could even define the viral type more specifically as sarbecoviruses with 10-25% sequence differences of the spike protein from SC1, as specified in the NIH proposal. SC2’s spike sequence differs from SC1’s by 23%.) The more interesting question is then what’s P(Wuhan, sarbecovirus|ZW). We can make a first approximation that the location and pathogen type are independent, then refine that in more detail.

What is ln(P(sarbecovirus|ZW))? We can estimate it roughly from there being one sarbecovirus in the 19 listed emerging pathogens. (Including the specified difference from SC1 would lower the probability further, as would inclusion of the FCS.) Using the method described in Appendix 3, we obtain

L1 = 2.65 ± 0.8 →logit1 = 2.3.

(Here for the ZW account we have in the combined prior and likelihood factor in effect ignored the non-sarbecovirus diseases and just used an estimate of one sarbecovirus outbreak per about 50 years.) The uncertainty is large because the ZW statistics are based on rare events, so this likelihood ratio is noticeably discounted. Again, these numbers are not meant to convey false precision. (For this term, with ln(P(sarbecovirus|LL))=0, properly separating the integrals over uncertainties in the two likelihoods would have no effect.)

What is ln(P(Wuhan|sarbecovirus, ZW))? Here things become a little more subtle because different pathogens are likely to arise in different places. We can start with a first approximation, that since Wuhan has ~0.7% of China’s population and ~1.1% of the urban population P(Wuhan|sarbecovirus, ZW) =~0.01, perhaps uncertain to about a factor of 2. That would give:

L2 = 4.6 ± 0.7 →logit2 = 4.4.

(For this term, with ln(P(Wuhan|sarbecovirus, LL)) not much less than zero, properly separating the integrals over uncertainties in the two likelihoods would have very little effect.)
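Appendix 3 gives the method behind the arrows in the L1 and L2 lines. As a rough cross-check only, the sketch below assumes that the uncertainty in each ln-likelihood is Gaussian and averages the ZW likelihood over it, which lowers the log ratio by half the variance. That assumption is mine, not a restatement of Appendix 3, though it does land on the same rounded values.

```python
import math

def discounted_logit(L, sigma):
    """Log likelihood ratio after averaging P(obs|ZW) over Gaussian error in its log.

    Assumption: ln P(obs|ZW) ~ Normal(-L, sigma^2), so E[P(obs|ZW)] = exp(-L + sigma^2/2).
    With P(obs|LL) = ~1 the log of the ratio then becomes L - sigma^2/2.
    """
    return L - sigma ** 2 / 2

# Sarbecovirus term: values as stated in the text.
logit1 = discounted_logit(2.65, 0.8)

# Wuhan term: P(Wuhan|sarbecovirus, ZW) = ~0.01, uncertain to about a factor of 2.
logit2 = discounted_logit(math.log(1 / 0.01), math.log(2))

print(f"logit1 = {logit1:.1f}")
print(f"logit2 = {logit2:.1f}")
```

The discount grows with the square of the uncertainty, which is why the poorly constrained sarbecovirus term loses proportionally more than the Wuhan term.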

Is there any reason to think that Wuhan would be a particularly likely or unlikely place compared to that simple population-based estimate? A recent paper working entirely within the ZW framework argues that SC2 is a fairly recent chimera of known relatives living in or near southern Yunnan, and that transmission via bats is essentially local on the relevant time scale. More detailed recent work fully confirms that conclusion and further narrows the location to “southern Yunnan, northern Laos and north-western Vietnam”. Wuhan is sufficiently remote from those locations that WIV has used Wuhan residents as negative controls for the presence of antibodies to SARS-related viruses. Thus Wuhan residents are not particularly likely to pick up infections of this sort from wildlife.

For the market branch of the ZW hypothesis, ZWM, the likelihood drops even more since Wuhan has a much smaller fraction of the wildlife trade than of the population. The total mammalian trade in all the Wuhan markets was running under 10,000 animals/year. The total Chinese trade in fur mammals alone was running at about 95,000,000 animals/year (“皮兽数量… 9500 万”). For raccoon dogs, for example, the Wuhan trade was running under 500/yr compared to the all-China trade of 1M or more, 12.3 M according to a more recent source. The Wuhan fraction was then at most about 1/2000. We can also compare the nationwide numbers for some food mammals with those of Wuhan. For the most common (bamboo rats) Wuhan accounted for only about 1/6000, apparently largely grown locally, far from sources of the relevant viruses. For wild boar Wuhan accounted for less than 1/10,000. Wuhan accounted for a higher fraction (1/400) of the much less numerous palm civet sales, but none were sold in Wuhan in November or December of 2019. It seems P(Wuhan|ZWM) would be much less than 1/100, something more like 1/1000. We may check that estimate in an independent way to make sure that it is not too far off. In response to SC2 China initially closed over 12,000 businesses dealing in the sorts of wildlife that were considered plausible hosts. Many of these businesses were large-scale farms or big shops. With only 17 small shops in Wuhan we again confirm that Wuhan’s share of the ZWM risk is not likely to be more than 1/1000, distinctly less than the population share of 1/100.
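The ratios quoted above follow directly from the counts. The short sketch below recomputes only the cases where both a Wuhan number and a national number are given in the text. The Wuhan counts are upper bounds ("under"), so the true shares would be smaller still. The labels are mine.

```python
# (Wuhan count, national count) pairs as quoted in the text.
counts = {
    "all Wuhan market mammals vs. national fur mammals alone": (10_000, 95_000_000),
    "raccoon dogs, national = 1M": (500, 1_000_000),
    "raccoon dogs, national = 12.3M": (500, 12_300_000),
    "wildlife businesses (a count, not weighted by size)": (17, 12_000),
}

# Wuhan's share in each category, printed in the "1/N" form used above.
shares = {label: wuhan / national for label, (wuhan, national) in counts.items()}

for label, share in shares.items():
    print(f"{label}: about 1/{1 / share:.0f}")
```

The business count alone gives a share a little above 1/1000, but since the Wuhan shops were small and many of the closed businesses elsewhere were large farms or big shops, a size-weighted share would be lower.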

Future work, of which I’ve seen only crude preliminary versions, should separate out each different species to see what fraction of the market sales occurred in Wuhan specifically in late 2019 for species with probable SC2 susceptibility and sources near Yunnan, if any such species exist.

The tiny fraction of the wildlife trade that is found in Wuhan means that the specific market version ZWM has much steeper odds to overcome than non-market ZW accounts would have. It will help to keep this in mind as we see further evidence that the specific market spillover hypothesis runs into other major difficulties.

Sanity Check

At this point of the analysis the combined point estimate of the logit is ~2.5 which would give odds about 12/1 favoring lab leak. When we consider how uncertain that estimate is, averaging over the range of reasonable priors, the odds would drop to about 4/1. Does that agree with other ballpark estimates?
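Before making that comparison, here is a sketch of the arithmetic behind those two figures. It assumes that "averaging over the range of reasonable priors" means averaging the lab-leak probability over a Gaussian spread in the logit with the standard error of 2.3 quoted for the prior. That reading, the helper names, and the simple grid integration are my own choices, not necessarily the exact procedure used here.

```python
import math

L0, sigma0 = -4.2, 2.3        # prior log odds and its standard error
logit1, logit2 = 2.3, 4.4     # discounted sarbecovirus and Wuhan terms

logit = L0 + logit1 + logit2  # combined point estimate
point_odds = math.exp(logit)

def sigmoid(x):
    """Convert log odds to a probability."""
    return 1.0 / (1.0 + math.exp(-x))

def averaged_probability(mean, sd, n=4001, width=8.0):
    """Average sigmoid(x) over Normal(mean, sd) on a uniform grid (assumed Gaussian)."""
    lo = mean - width * sd
    step = 2 * width * sd / (n - 1)
    weighted = norm = 0.0
    for i in range(n):
        x = lo + i * step
        w = math.exp(-0.5 * ((x - mean) / sd) ** 2)
        weighted += w * sigmoid(x)
        norm += w
    return weighted / norm

p_avg = averaged_probability(logit, sigma0)
averaged_odds = p_avg / (1.0 - p_avg)

print(f"combined logit = {logit:.1f}")
print(f"point odds = {point_odds:.0f}/1")
print(f"odds after averaging = {averaged_odds:.1f}/1")
```

Averaging pulls the odds toward 1/1 because the probability saturates near 1 on the high side of the logit range while it can still fall substantially on the low side.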

The lead author of Proximal Origins, Andersen, wrote his colleagues on 2/2/2020 “Natural selection and accidental release are both plausible scenarios explaining the data - and a priori should be equally weighed as possible explanations. The presence of furin a posteriori moves me slightly more towards accidental release, …” Based on general priors but without specific knowledge of the DEFUSE proposal and before taking into consideration the more detailed data such as the FCS, Andersen thought the probabilities were about equal. Our odds, taking the existence of DEFUSE into account, are only a bit higher than Andersen obtained without knowledge of DEFUSE.

Demaneuf and De Maistre looked in detail at past evidence for various scenarios of natural and lab-related outbreaks. They consider ZL accounts, but the factors that go into whether there’s a research leak are largely the same as for LL, with the difference arising more from factors we have not yet included. Without considering sequence features beyond that the virus is SARS-related they conservatively estimate that the lab-related to non-lab-related odds for an outbreak in Wuhan, P(ZL|Wuhan)/P(ZW|Wuhan), are about one-to-one. Their base estimate, for which they make no special effort to lean either way, is about 4/1.

A collection of other Bayesian estimates described as “priors” but corresponding to this point in my analysis were made in response to a formal debate and presented on Scott Alexander’s blog. These odds estimates for this point of the analysis ranged from 4.4 to 165, as shown in the table in Appendix 1. All but one of the estimates were made by people who considered a lab leak unlikely. My estimate is thus on the low side of this range. Once again we see that there’s nothing eccentric about the general range of odds I obtain just from the broadest what/when/where considerations. They are in fact conservative.

The key papers arguing for zoonosis

Proximal Origins

Now let’s look at the three main papers on which claims that the evidence points to ZW rest. The first is the Proximal Origins paper, whose valid point was that ZW was at least possible. Its initially submitted version concluded logically that therefore other accounts were “not necessary”. That conclusion is implicit in all the Bayesian analyses, which neither assume nor conclude that P(ZW)=0.

The final version of Proximal Origins changed that conclusion under pressure from the journal to the illogical claim that therefore accounts other than ZW were “implausible”. To the extent that the paper had an argument for LL being implausible it was based on the assumptions that a lab would pick a computationally estimated maximally human-specialized receptor binding domain rather than just a well-adapted human receptor binding domain and that some of the modern methods of sequence modifications would not have been used. Neither assumption made sense, invalidating the conclusion. Defense Department analysts Chretien and Cutlip already noted in May 2020: “The arguments that Andersen et al. use to support a natural-origin scenario for SARS CoV-2 are not based on scientific analysis, but on unwarranted assumptions.”

The later release of the DEFUSE proposal further clarified that the precise lab modifications that Proximal Origins argued against were not ones that WIV had been planning. The DEFUSE proposal described adding some “human-specific” proteolytic site, not a special computationally optimized one, emphasizing the protease furin but also mentioning others. The particular “RRAR” amino acid sequence for the FCS that Proximal Origins argued would not have been used is a fairly obvious candidate for a known human proteolytic cleavage site that works well for furin but also works for some other proteases, since as Harrison and Sachs point out: “The FCS of human ENaC α has the amino acid sequence RRAR'SVAS…that is perfectly identical with the FCS of SARS-CoV-2.” That may well be an accident, but it’s a reminder that the FCS looks similar to the sort that DEFUSE proposed. (RRAR was also identified as a putative coronavirus proteolytic cleavage site at the S1/S2 boundary in previous work at WIV.) Recently, Deigin has provided a detailed account of the FCS work from members of the DEFUSE team and their close collaborators, showing that the PRRAR site would be an unsurprising choice given the group’s work on a deadly feline coronavirus that sometimes uses exactly that amino acid sequence. It has also recently been found that the only other known occurrence of the particular nuclear localization site pattern that includes the PRRXR segment and is flanked by a pair of O-glycosylation sites is not in a natural virus but in an “artificial MERS infectious clone”. Nothing about SC2 at the level of detail of these first looks points strongly toward LL or ZW.

As further confirmation, we now know that even weeks after Proximal Origins was published its lead author did not have confidence in its conclusions or even believe its key arguments. On 4/16/2020 Andersen wrote his coauthors: “I'm still not fully convinced that no culture was involved. If culture was involved, then the prior completely changes …What concerns me here are some of the comments by Shi in the SciAm article (“I had to check the lab”, etc.) and the fact that the furin site is being messed with in vitro. … no obvious signs of engineering anywhere, but that furin site could still have been inserted via gibson assembly (and clearly creating the reverse genetic system isn't hard -the Germans managed to do exactly that for SARS-CoV-2 in less than a month.” Thus Proximal Origins contains nothing that would lead us to update our odds in either direction.

Phylogeny and location: Pekar et al. and Worobey et al.

The next papers involve phylogenetic data and intra-city location data. The likelihood factor for their combination does not factorize into separate contributions. The reason is that the location data were used to support one particular version of the ZWM hypothesis and the phylogenetic data make that particular version implausible, although on their own they would say little to disfavor the general ZW hypothesis. The core of the tension is that the viral sequences of the market-linked cases are farther from the sequences of the wild relatives than are sequences of other cases.

Pekar et al. argued based on computer simulations of a simplified model of how the infection would spread that the presence of two lineages (A and B) differing by two point mutations in the nucleic acid sequence without reliably identified intermediate cases was unlikely if all human cases descended from a single most recent common ancestor (MRCA) that was in some human. They claimed incorrectly to obtain Bayesian odds of ~60 favoring a picture in which the MRCA was in another animal shortly before two separate spillovers to humans. Simply correcting multiple explicit mathematical and coding errors in their analysis changes the odds to around 4/1 favoring a single spillover, as discussed in Appendix 2.

At any rate, there is no obvious reason why getting two closely related strains from having an MRCA in some other animal a few transmission cycles before two spillovers to humans would say much about whether the other animal was a standard humanized mouse in a lab or an unspecified wildlife animal in a market. For example, multiple workers were exposed to Marburg fever in the lab and the Sverdlovsk anthrax cases included multiple strains. In the most relevant case, SARS spilled over in “four distinct events at the same laboratory in Beijing.” DEFUSE itself described planned work with quasi-species, collections of closely related strains, rather than purified strains. Thus further discussion of the Pekar et al. model seems irrelevant to our central question, but I still include a discussion in Appendix 2 about some of the major technical problems of the paper just for its implications for the reliability of major publications. (If there were evidence for multiple spillovers that might tend to reduce the likelihood of the difficult direct bat to human route regardless of whether or not that involved research, as discussed in Appendix 5.)

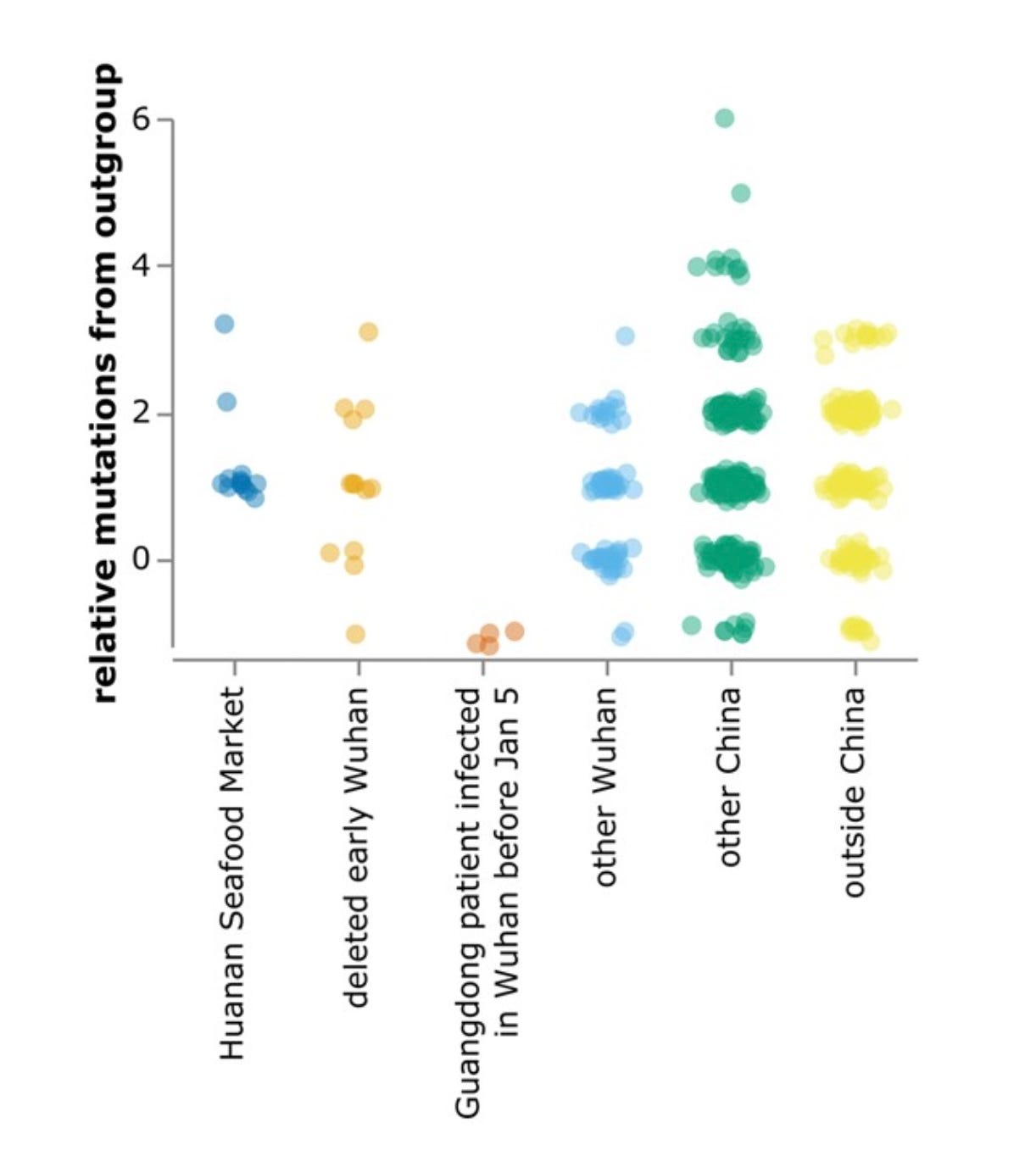

Let’s step back from opaque, assumption-laden, error-ridden modeling that seems approximately irrelevant to our ZW vs. LL comparison to look at what the lineage data seem to say prima facie. (Jesse Bloom and Trevor Bedford wrote a convenient introductory discussion.) Lineage A shares with related natural viruses the two nucleotides that differ from B. Thus lineage A was the better candidate for being ancestral, as Pekar et al. acknowledged. Pekar et al. describe 23 distinct reversions out of 654 distinct substitutions in the early evolution of SC2. Naively, the chance that when two lineages are separated by two mutations (2 nucleotides, “2nt”) both those mutations would be reversions is then roughly (23/654)^2 = 0.00124 = ~1/800. A more detailed calculation of the probability using data from Pekar et al. on frequencies of different reversion types gives a slightly lower value, as discussed in Appendix 2. At this point the conclusion that B was not ancestral to A tells us nothing about P(LL)/P(ZW), but it will become important when integrated with information about locations of early cases and early viral traces.
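The naive reversion estimate is easy to reproduce. The sketch below treats the two mutations as independent draws with the same reversion frequency, which is exactly the simplification that the more detailed Appendix 2 calculation relaxes. The variable names are mine.

```python
# Counts reported by Pekar et al., as quoted in the text.
reversions = 23
substitutions = 654

p_single = reversions / substitutions   # chance that one given substitution is a reversion
p_both = p_single ** 2                  # both of two independent substitutions are reversions

print(f"p_single = {p_single:.4f}")
print(f"p_both = {p_both:.5f} = ~1/{1 / p_both:.0f}")
```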

The 12 early cases with known market linkage and known lineage were of lineage B, not A. Lineage A was almost entirely absent from the main suspected site of the wildlife spillover, the Huanan Seafood Market (HSM). Although multiple traces of B were found in HSM, traces of A were found only on one glove, with additional mutations indicating that it was not from an early case. This one late-sequence trace out of 923 samples is easily consistent with contamination from sampling conducted well after both lineages had become widespread in Wuhan, similar to the one sample out of 30 that tested positive at the Dongxihu Market. Thus the sequence data indicate that lineage A was quite unlikely to have originated at HSM. This conclusion applies whether or not the spillover that led to lineage A was the only one or whether there was a separate spillover to lineage B.

Both Kumar et al. and Bloom have analyzed the phylogenetic data, concluding that the MRCA was, if not A itself, likely to differ from A by an additional nt shared with wild relatives but not with B. (There is some reason to doubt that conclusion since A differs from the main suspect by a T→C mutation, much less common at this stage than a C→T mutation, although non-reversionary mutations are much more common than reversionary ones.) Bloom notes that cases were already being reported by mid-November 2019 and Kumar et al. estimate that the MRCA was probably present in Oct. 2019, with the first spillover case likely to have occurred weeks earlier. A later analysis from the Kumar group using updated techniques and more complete data places the date “in mid-September to early-October 2019”. Bloom finds more early lineage A at multiple locations away from the market, including other parts of Wuhan, other parts of China, and other countries, as seen in his Fig. 4, reproduced below. The phylogeny data thus seem inconsistent with HSM being the only spillover site, since a lineage closer to the ancestral relatives was spreading widely around the time the less-ancestral lineage showed up at HSM.

A thorough new Chinese paper on the phylogeny issue that uses the most complete data came out in early 2024. The Zhang group finds no evidence of discontinuous evolution, i.e. they found clean sequences that are intermediate between A and B. Their existence tends to undermine the premise of Pekar et al. although it does not prove that the intermediate sequences were upstream of B. They conclude, contra Pekar, that a single spillover is most likely. (As discussed in the Appendix, the same conclusion follows even without those sequences just from fixing explicit math errors in the Pekar et al. paper.) They find four plausible candidates for the MRCA, including the two Bloom pointed to, one of which is also the one preferred by Kumar et al. They write “In sum, although multiple lineages of SARS-CoV-2 were co-circulating during the early period of the COVID-19 epidemic, they still exhibited the evolutionary continuity. All of them may have evolved from one common ancestor, probably lineage A0 or a unidentified close relative, and jumped into human via a single zoonotic event.” No version of lineage B is included as a plausible MRCA. [Zhang was then locked out of his lab, closed for alleged safety reasons, but now has been let back in.]

A paper by Samson et al. now uses a more complete data set to estimate the spillover time via the MRCA giving a value “between August and early October 2019”. (A peculiarity in one figure, showing RATG13 as descended from an ancestor dated after RATG13 was reported to be sampled, is a reminder of the perils of taking these analyses too seriously.) The later part of that range agrees generally with the estimates from the Kumar and Bloom groups, although the 2022 Pekar model and others referenced in it based on more limited data sets estimate a later time.

At any rate, the MRCA timing does not settle the question of whether the MRCA was in a human or only in some prior natural or lab host followed by more than one spillover. Data on early human cases are more relevant, but as we shall see they aren’t completely clear-cut.

Although the possibility of artifacts cannot be excluded, La Rosa et al. found wastewater evidence that SC2 started to show up substantially in northern Italy by December 18th 2019. (Fongaro et al. found a positive wastewater test for November 27 in Brazil, although that was probably from contamination since the sample contained mutations that only appeared later.) If the seemingly careful northern Italy report is correct the spillover would have had to have been more in the range estimated by the Kumar and Bloom groups rather than the late estimate of the 2022 Pekar paper.

A published report in National Geographic also indicates that SC2 was becoming widespread in Wuhan by November 2019: “In early January, when the first hazy reports of the new coronavirus outbreak were emerging from Wuhan, China, one American doctor had already been taking notes. Michael Callahan, an infectious disease expert, was working with Chinese colleagues on a longstanding avian flu collaboration in November when they mentioned the appearance of a strange new virus. Soon, he was jetting off to Singapore to see patients there who presented with symptoms of the same mysterious germ.” Likewise, the prominent Dutch viral gain-of-function researcher R. Fouchier reported (see 1:50 here) hearing of the new outbreak in the first week of December, 2019.

Data from several search engines also indicate early spikes in interest in some SARS-like infection. On Dec. 1-3 2019 there was a particularly noticeable spike in searches for “SARS” on the Chinese WeChat site, apparently representing thousands of searches. Other early blips from Chinese cities do not have publicly quantified absolute magnitudes.

The earlier time estimated from these other lines of evidence is inconsistent with the account in which cases start with a spillover at HSM in early December 2019. I do not use that as evidence against the HSM account because the sequence-based timing estimates have big uncertainty and the other evidence isn’t quite airtight.

The point of the Pekar et al. paper seems to have been that the absence of traces of early lineage A in HSM does not rule out the possibility that HSM was a spillover site for lineage B since lineage A could have spilled over elsewhere or perhaps also at HSM but leaving neither detected cases nor early-case RNA there. That possibility does not require that the probability of having had just one spillover is very small, just that the probability of having had more than one is not very small. Thus although the many errors in the Pekar et al. paper, discussed in Appendix 2, invalidate its conclusion that a unique spillover was highly improbable, the lineage results are still at least somewhat compatible with a multi-spillover picture including one at HSM.

We’ve looked at whether the sequences found in the HSM were reasonably compatible with that being the only spillover site (they weren’t) but we haven’t made the equivalent test for WIV. Depending on what sequences were there, one could end up with a Bayes factor either favoring ZW or LL.

We know that the DEFUSE proposal claimed WIV had more than 180 relevant coronavirus sequences, apparently including many unpublished ones [7/2/2025 unfortunately that video of Alice Hughes saying that from her experience in Wuhan there were many unpublished sequences has been taken offline.]. Unfortunately we have little information about those. Publication of newly gathered sequences seems to have abruptly stopped with those gathered in early 2016, according to the data I’ve been provided. In Sept. 2019 WIV started removing public access to its sequence collection, finishing early in the pandemic. Daszak has now conceded in Congressional testimony that WIV continued gathering samples after the last reported ones. Daszak further conceded "So, is it possible they [WIV] have hidden some viruses from us? That we don't know about. Of course." Proximal Origins author Holmes noted “I’m pretty sure that groups in China are sitting on more SC-2 like viruses….It’s striking to me that CCDC have published so little on this yet have supposedly sampled so many animals. This doesn’t add up. Never discount the politics.” In particular, it seems unlikely that WIV has published the full set of sequences they obtained in 2012 from miners who fell ill with bat-derived coronavirus from Mojiang in southern Yunnan.

Y. Deigin discusses further omissions from public disclosure of what sequences were known as well as of when and where they were obtained. A related account of missing data has appeared in the press.

Some people nonetheless consider the lack of evidence for a close match of a WIV sequence to SC2 as indicating that SC2 was unlikely to come from WIV. Others have said it’s just from reflexive bureaucratic secrecy with no particular implications. Others have read the missing-data situation as indicating a systematic cover-up of some embarrassing sequence data. Support for the last interpretation may be found in a note dated 4/28/2020 from Daszak: “ …it’s extremely important that we don’t have these sequences as part of our PREDICT release to Genbank…. having them as part of PREDICT will being [sic] very unwelcome attention…”

The official explanation of why the database was taken offline was that it was being hacked. It seems to me that it would have been easy and inexpensive to make copies on some standard read-only media and distribute these to many dozens of labs and libraries around the world. That would have made the information available without allowing hackers to modify anything without a massive worldwide conspiracy. A narrower distribution to carefully selected institutions allowing only on-site use could have not only prevented modifications but also minimized unauthorized access, although it is difficult to see why maintaining priority in using these research results would be important enough to justify the suspicions created by concealing them. An evaluation of the likelihoods under ZW, ZL, and LL of the removals of various sorts of data from Wuhan and the inconsistencies between various statements of prominent virologists might be an interesting project for a social scientist, but not one I will use to update here.

Although fully knowing what sequences were in Wuhan labs would be almost equivalent to answering the origins question one way or the other, our current estimate of what’s there would mostly just be based on the other evidence leaning toward LL, ZL, or ZW, augmented a bit by a highly subjective sense of how forthright people are likely to be. We don’t want to either double-count our other evidence or introduce especially subjective terms. No update is justified, at least without explicitly considering the probability that the data would have been hidden if it contained nothing suggesting a lab leak. To summarize in more formal-looking language that some readers have indicated they prefer:

ln(P(no known backbone sequence, multiple types of hidden data|LL)/P(no known backbone sequence, multiple types of hidden data|ZW)) = ~0±big uncertainty.

In combining the lineage and case location data we can simplify a bit by using one point on which there is unanimity: if there were more than one spillover, either all or none were lab-related. Is there evidence that lineage B spilled over to humans at HSM? If so, that would support ZWM despite its otherwise low odds due to Wuhan’s extremely small fraction of China’s wildlife trade.

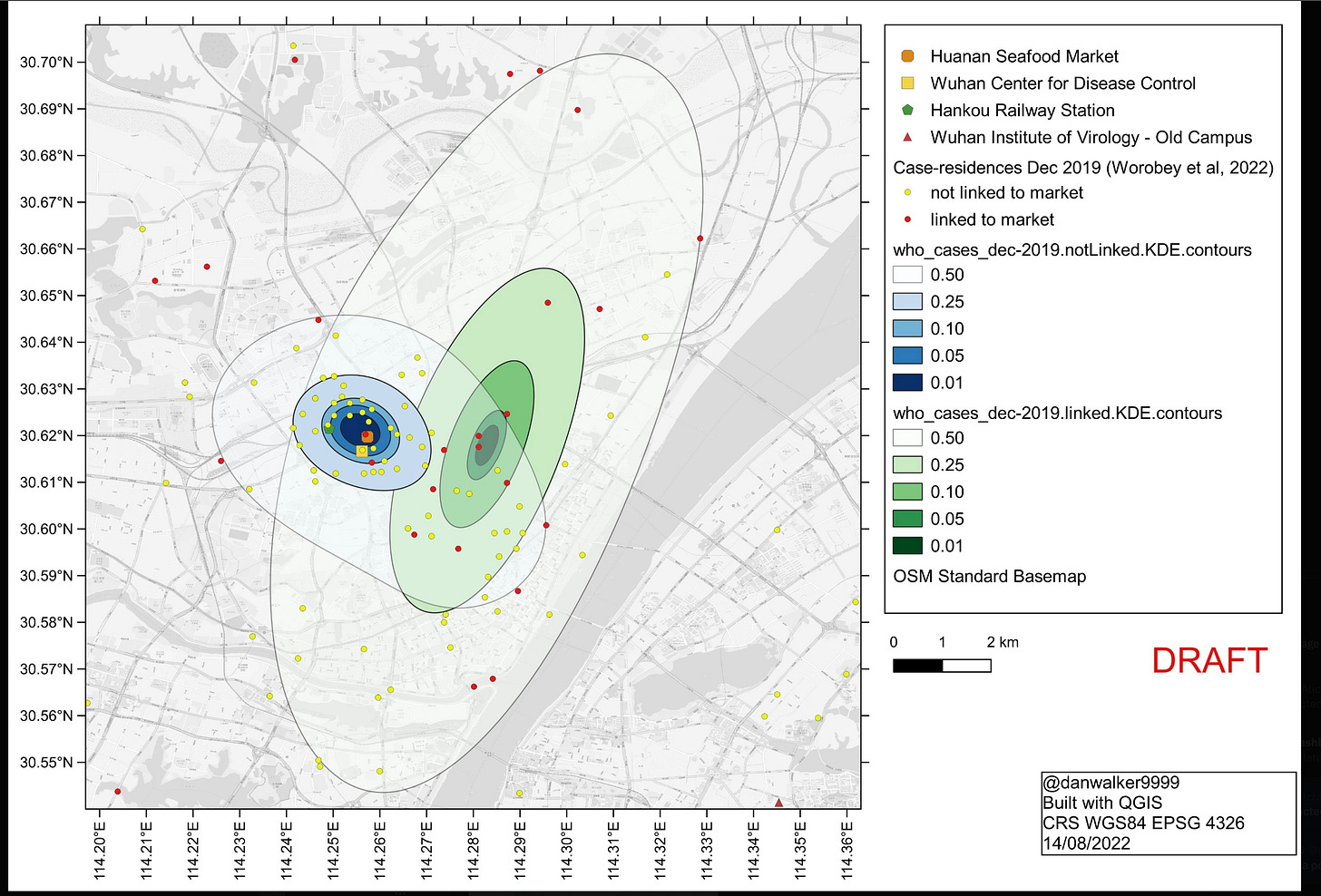

The widely publicized paper by Worobey et al. used case location data to argue that HSM was not just a superspreading location but also the location of at least one spillover to humans. Worobey et al. argue that since there were hundreds of plausible superspreading locations it would require a remarkable coincidence, with probability ~1/400, for a possible spillover site, HSM, to be the first ascertained spreading site unless it were the actual spillover site. Of the major arguments (other than priors) supporting ZW, I think this is the only one that looks plausible on first inspection. While the argument sounds reasonable, one can get a preliminary empirical feel for how much of a coincidence that would be by looking at the first notable ascertained outbreak in Beijing some 56 days after initial cases were controlled. It occurred at the Xinfadi wet market, which could not have been the site of the months-earlier spillover. In Singapore, the “biggest Covid-19 community cluster” was found at the Jurong seafood market. In Thailand, the biggest outbreak was at the Mahachai Klang Koong seafood market. In Chennai, India, the biggest ascertained spread was at the Koyambedu vegetable market. Apparently it is not so unlikely for the first ascertained spread of a pre-existing human virus to be located at a wet market. Given that the previous related disease, SC1, was known to have spilled over from wildlife, one would expect the probability of the first official ascertainment of SC2 spread in Wuhan to be even more tilted toward market cases than would that of later ascertainments, when human-to-human transmission was known. Evaluating whether the market proximity supports ZWM requires a closer look.

Worobey et al. do not cite any relevant instance in which the sort of case-location data analysis they used identified the source of an epidemic. In the closest historical analogy I can think of, John Snow’s famous 1854 map-based identification of a water pump as a cholera source, people from infected households had walked from their houses to the pump. Even for Snow the most convincing evidence for water-borne disease causation was not the spatial distribution of a cluster around the pump, subject to multiple confounders, but rather the correlation with the pseudo-random spatially mixed distribution of water from two companies, only one of which was polluted. Unfortunately an analog of one of his most convincing pieces of evidence, reduction of the disease cluster around the pump right after its handle was removed, is not available for SC2. To the extent that such a temporal correlation is available, it points toward DEFUSE LL, unfortunately due to the timing of the onset rather than the timing of a reduction.

The case data Worobey et al. used omitted about 35% of the clinically reported known cases, perhaps ones that were not PCR-confirmed. Omission of cases can be a serious problem for an analysis based on spatial correlations. (Proximal Origins author Ian Lipkin described the Worobey et al. analysis as “… based on unverifiable data sets…”) The collection of clinically reported cases, and of the subset then PCR-confirmed, was already biased because proximity and ties to HSM were used as criteria for detecting cases in the first place. I now include in Appendix 2 an argument that Worobey et al. themselves present evidence that proximity-based case ascertainment bias was too large to allow proximity-based inference about the origins.

Those most familiar with the case data, including prominent zoonosis advocates, had noticed the ascertainment problem. Virologist Jeremy Farrar explained, “That tight case definition resulted in an Escher’s loop of misguided circular reasoning: testing only those people with a link to the market created the illusion that the market was the source of disease, because everyone testing positive had been there. In reality, the net should have been cast wider…” In his congressional testimony Baric summed up the impressions of many informed scientists: “Clearly, the market was a conduit for expansion. Is that where it started? I don’t think so.”

Even aside from the severe ascertainment bias, re-analysis using standard spatial statistical methods by Stoyan and Chiu, experts in such techniques, showed that the statistics used could not identify HSM as the starting location. One problem was that other key sites were also inside the cluster region, including a CDC viral lab and the Hankou railway station. In addition to the more technical re-sampling statistical analysis, the re-analysis made the obvious point that in a modern city infections do not spread symmetrically in a short-range local pattern but follow other routes, e.g. commuter lines. A paper that Worobey et al. cite specifically shows extremely anisotropic movements around Wuhan, pointing out that “The intra-urban movement of individuals is affected by a number of factors, such as …mode of transportation, transportation networks….” Analysis of the spread of Covid in New York City concluded “The combined evidence points to the initial citywide dissemination of SARS-CoV-2 via a subway-based network, followed by percolation of new infections within local hotspots.” The Hankou station is on Metro Line 2, which connects directly to the stop nearest WIV.

A report from the WHO and the Chinese CDC looking at the case location data concluded “Many of the early cases were associated with the Huanan market, but a similar number of cases were associated with other markets and some were not associated with any markets….No firm conclusion therefore about the role of the Huanan Market can be drawn.” That agrees with an extensive analysis by Demaneuf detailing the serious obstacles to inferring a spillover location from the sparse non-randomly selected case locations. In an interview with the BBC (start at 23:40) George Gao, head of China’s CDC, acknowledged that there was intense ascertainment bias so that “maybe the virus came from other site”. [The audio is unclear whether the word is “site” or “side”, but Gao later told B. Pierce that he meant “site”, less suggestive of WIV than “side” would be.]

Worobey et al. include a map of locations of requests to the Weibo web site for assistance with Covid-like disease, which provides a way of looking at the location distribution within Wuhan without selective omission of cases. The earliest Weibo map Worobey et al. present shows a tight cluster near to but not centered on HSM. Instead it clusters tightly more than 3 km southeast on a Wuhan CDC site (not part of WIV) where BSL-2 viral work was done. Just before the time of the first officially recorded cases the CDC opened a new site within 300m of HSM, indistinguishable from the HSM site via the sorts of case location data used in Worobey et al.

More relevant to the question of the original spillover, the paper that provided the Weibo map also had a map of Weibo data prior to 1/18/2020. By far the largest cluster of early reports in this early data set is close to the WIV on the south side of the Yangtze, as shown in this version of that map from a Senate report that includes WIV and HSM locations. Such maps cannot reliably point to the spillover site.

Levin has now done extensive modeling of the early case home addresses combined with the approximate case times. This allows the use of more conventional modeling methods for infectious disease spread. Focusing on modeling the unlinked cases, he finds a Bayes factor of 27 favoring a spillover from research on the south side of the Yangtze over spread from the HSM. I suspect there may be a missing factor favoring ZWM, however, because the model seems to assume that an HSM-linked cluster is to be expected under LL. While we have seen, based on other cities, that such a cluster is not extremely surprising under LL, it certainly has a probability less than 1. Roughly speaking, at least for now, the combined case home-address-time data do not give a clear Bayes factor favoring either ZWM or LL. That conclusion may change with further modeling, but as we shall see, unless it changes substantially ZWM will already be disfavored over less specific ZW versions.
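The size of the possible missing factor can be made concrete with a minimal sketch. It assumes, as my reading of the paragraph above, that the reported factor of 27 is conditional on an HSM-linked cluster existing, so that a fuller comparison multiplies it by the ratio of the probabilities of such a cluster under each hypothesis. The function name and the trial probabilities are hypothetical illustrations, not estimates from Levin or from this analysis.

```python
import math

def adjusted_bayes_factor(bf_reported, p_cluster_given_ll, p_cluster_given_zwm=1.0):
    """Rescale a reported LL-vs-ZWM Bayes factor by how probable an
    HSM-linked cluster is under each hypothesis (assumed correction)."""
    if not (0.0 < p_cluster_given_ll <= 1.0 and 0.0 < p_cluster_given_zwm <= 1.0):
        raise ValueError("probabilities must lie in (0, 1]")
    return bf_reported * p_cluster_given_ll / p_cluster_given_zwm

BF_REPORTED = 27.0  # Levin's factor as quoted in the text

# Hypothetical values of P(HSM-linked cluster | LL), for illustration only.
for p in (1.0, 0.3, 0.1, 1.0 / 27.0):
    bf = adjusted_bayes_factor(BF_REPORTED, p)
    print(f"P(cluster | LL) = {p:.3f} -> adjusted BF = {bf:5.2f}, ln BF = {math.log(bf):+.2f}")
```

Under these assumptions the evidence stops favoring LL over ZWM only if an HSM-linked cluster has a probability of roughly 1/27 or less under LL, which is the sense in which the missing factor matters.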

Worobey et al. present another argument: that the distribution of SC2 RNA within HSM pointed to a spillover from some wildlife there. If correct, that argument would be more directly relevant to whether a spillover occurred at HSM than are the locations of selected cases after Covid became more widespread.

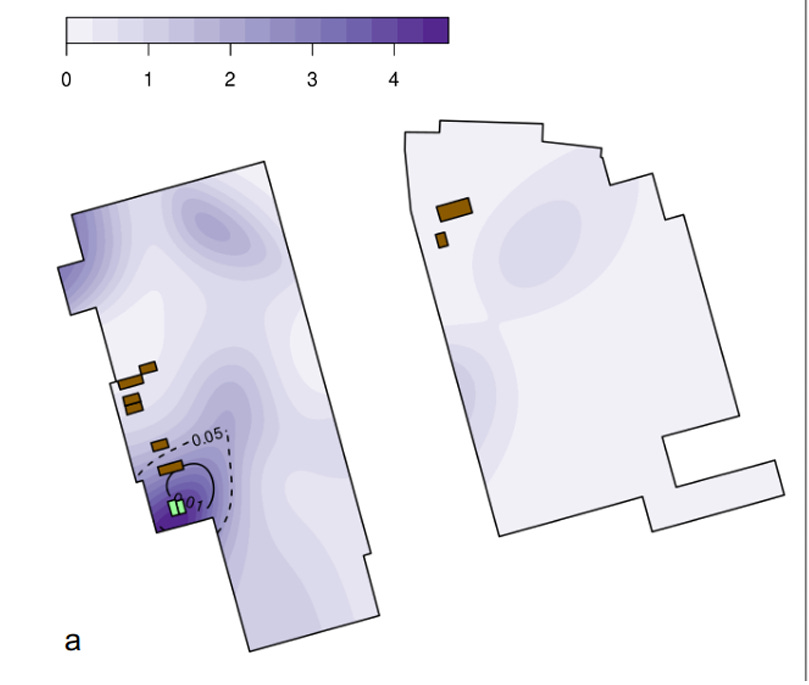

The positive SC2 RNA reads did tend to cluster in the general vicinity of some of the HSM wildlife stalls, even after correcting for the biased sampling that focused on that area. That area, however, is also where bathrooms and a mahjong/cards room are located, both likely spreading sites. Demaneuf documents evidence from several Chinese and Western sources that the early market cases were largely of old folks who frequented the stuffy, crowded little games room. A finer-grained map using the Worobey data and their heat-map method (reproduced below) showed the hot spot for the proportion of samples that test positive to be centered on the bathroom/games spot, although one wildlife stall is also close by. (Zach Hensel reports that there’s also another right next to the bathrooms, although the Worobey paper only shows an “unknown meat” stall rather than another live animal stall.) Here the bathrooms are shown in green and the wildlife stalls in brown.


In what should have been a short-lived coda, there were many press stories that SC2 RNA found in a stall with DNA of a raccoon dog showed that species to be the intermediate host. The presence of wildlife in the market was not news; it is implicit already in our priors. The question was whether there was some particular connection between that wildlife and SC2. When Bloom went over the actual data for the individual samples, he found that that particular sample had almost undetectable SC2 RNA, far less than many others. Overall, sample-by-sample SC2 RNA correlated negatively with the presence of DNA from possible non-human hosts. In contrast, for four of the five actual wildlife-infecting viruses their RNA correlated strongly positively with the corresponding animal DNA, with too little RNA to determine for the fifth. A newer analysis by Bloom again indicates no support for an HSM wildlife spillover.

A new paper with many of the same authors as Worobey et al. has again presented much of the same data, although a bit more tentatively with regard to the two-spillover claim. It adds some sequence data on several HSM mammals and some of their non-SC2 viruses. The raccoon dog DNA seems consistent with the local wild animals, consistent with previous reports that these were the source. Those local populations tested negative for SC2-like viruses. No evidence was reported that any potentially susceptible species was sourced from Yunnan or further south. For a detailed though still rough and inconclusive summary of what’s known about the sourcing, susceptibility, location, etc. of HSM wildlife and wildlife products, see these sites. In brief, it now looks like no species was sourced near Yunnan, sold live in HSM in the relevant months, and able to propagate SC2. At the moment it seems that no species is known to meet even two of those criteria.

Levin has also done standard spatial modeling of the swab results, obtaining a Bayes factor of 3.2 favoring a model that omits any dependence on proximity to stalls selling raccoon dogs over one in which that proximity matters. This approximate result is close to what one would get from Bloom’s analysis just by counting how likely it was for other detected animal coronaviruses to fail to show positive correlation with their host mtDNA. Thus the internal SC2 RNA data by themselves make it moderately unlikely that wildlife had any direct connection with SC2 spread in HSM.

The absence of any tendency of wildlife vendors to be infected is a sharp contrast with SC1 results. In HSM, none of the reported infections were of wildlife vendors. Levin has compared models for the location of stalls of cases that either come from proximity to raccoon dog stalls or from proximity to recent prior cases. He obtains a Bayes factor of 12 favoring the model in which raccoon dog stalls are irrelevant. I suspect that this factor is exaggerated because it doesn’t include models in which cases initially come from wildlife and then shift to coming from other human cases. The lack of any significant connection of the case stall locations with wildlife proximity should nonetheless provide another small Bayes factor disfavoring a wildlife source.
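For readers who prefer the logarithmic form used elsewhere in this analysis, the two quoted factors can be converted to natural logs with a short sketch. The dictionary labels and the helper name are my own shorthand. The sketch deliberately does not multiply the two factors together, since the text above treats the factor of 12 as probably exaggerated.

```python
import math

# Bayes factors quoted in the text (Levin's modeling). Each favors the model
# in which proximity to raccoon dog stalls is irrelevant.
QUOTED_FACTORS = {
    "swab locations": 3.2,
    "case stall locations": 12.0,
}

def log_bayes_factor(bf):
    """Natural log of a Bayes factor; positive values favor the numerator model."""
    if bf <= 0:
        raise ValueError("a Bayes factor must be positive")
    return math.log(bf)

for label, bf in QUOTED_FACTORS.items():
    print(f"{label}: BF = {bf:g}, ln(BF) = {log_bayes_factor(bf):.2f}")
```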

As CDC head Gao concluded, “At first, we assumed the seafood market might have the virus, but now the market is more like a victim. The novel coronavirus had existed long before”. Gao’s conclusion is consistent with the prior likelihood of Wuhan being the location of a market spillover already being far less than 1% because Wuhan markets sold less than 0.01% of the Chinese mammalian wildlife trade.

Nonetheless, to be conservative I will not include a Bayes factor disfavoring the general ZW hypothesis at this point, since markets are not the only path by which viruses can spill over. It is not clear from pre-pandemic warnings what fraction of the ZW prior probability should be assigned to ZWM, but unless that fraction were close to 1, even eliminating the ZWM possibility would not have a large effect on the net ZW odds.
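The point about the ZWM fraction can be illustrated with a minimal sketch that treats ZW as a mixture of a market path (ZWM) and all other zoonotic paths. The fraction `f_zwm` and the factor `bf_zwm` are hypothetical inputs, not values estimated in this analysis, and the non-market paths are assumed to be unaffected by the market evidence.

```python
import math

def net_zw_factor(f_zwm, bf_zwm):
    """Net likelihood factor on ZW when only its market sub-hypothesis is
    penalized by bf_zwm and the remaining (1 - f_zwm) share is untouched."""
    if not 0.0 <= f_zwm <= 1.0:
        raise ValueError("f_zwm must be a fraction between 0 and 1")
    if bf_zwm < 0.0:
        raise ValueError("bf_zwm must be non-negative")
    return (1.0 - f_zwm) + f_zwm * bf_zwm

# Extreme case: the market path is eliminated entirely (bf_zwm = 0).
for f in (0.2, 0.5, 0.9, 0.99):
    factor = net_zw_factor(f, 0.0)
    print(f"ZWM share of ZW prior = {f:.2f} -> net ZW factor = {factor:.2f}, ln = {math.log(factor):+.2f}")
```

Even with ZWM ruled out completely, the net factor on ZW is just one minus the ZWM share, so the log-odds shift stays modest unless that share is close to 1.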

Summary on the market sub-hypothesis, ZWM